Foreword

Recently, as ChatGPT, the new-generation AI chatbot released by OpenAI, has spread through the technology community, the field of Artificial Intelligence Generated Content (AIGC) has begun to receive wider attention from academia, industry and even ordinary users. With its distinctive “creativity” and a speed of creation beyond human reach, AIGC has set off a wave of enthusiasm for artificial intelligence. News reports describe AI works winning prizes and AI paintings being auctioned for millions of dollars. At the same time, companies at home and abroad, such as Kunlun Wanwei, have begun competing to deploy AIGC, bringing AI painting, AI composition and other products into content creation fields such as art, education and culture, and giving rise to unicorn companies. When the “creativity” that humans are proud of begins to be touched by AI, should our excitement be tempered by concern that artificial intelligence will replace humans?

To answer this question, we first need to understand artificial intelligence, AIGC and the latest research progress. In this article, we therefore introduce what artificial intelligence and AIGC are, and describe the industry-leading AIGC models and products of Kunlun Wanwei. Finally, we return to the question of whether we should be worried about the “creativity” shown by artificial intelligence.

1. Artificial Intelligence and AIGC

1.1 Introduction to Artificial Intelligence

Artificial Intelligence (AI) is the science and technology that studies theories, methods and applications for simulating and extending human intelligence. It enables computers to carry out strategies derived from available data without those strategies being explicitly programmed. AI makes machines intelligent by using computer programs to simulate human behavior. Over the past few decades, thanks to exponential growth in the quantity and quality of available data and the rapid development of high-performance computing hardware, artificial intelligence has made breakthrough progress in areas such as image recognition, natural language processing, recommendation systems and autonomous driving. In practical terms, the goal is to build powerful models that map input data to predicted outputs and that keep being updated as new data arrives.

More broadly, the goal of artificial intelligence is to create intelligent machines that can think in ways similar to humans, or to extend human intelligence in order to solve practical problems. In the past few years, many artificial intelligence systems have achieved breakthroughs and can now be applied to a variety of complex problems. Deep neural network models can already perform pathological analysis on X-ray images of the human body at a level close to that of a doctor, and they have beaten top human players in classic board games such as Go and in multiplayer competitive computer games. The applications of artificial intelligence, however, go far beyond these.

1.2 Artificial Intelligence and AIGC

At present, we can divide artificial intelligence models into two categories: discriminative models and generative models. A discriminative model takes a set of input data, such as English text, X-ray images or game screens, and after a series of calculations produces the corresponding target output, such as a translation, a diagnosis for the X-ray image, or the action to take at the next moment in the game. Discriminative models are probably the most familiar class of AI models; their purpose is to create a mapping between a set of input variables and a target output. The target output can be a set of discrete values (such as predicting the next word) or continuous values (such as predicting how much a customer will spend in a store within a certain period).

Generative models do not calculate scores or labels for input variables; they generate new data. This type of model can accept vectors that are unrelated to any actual values (even random vectors) and produce complex outputs such as text, music or images. Artificial Intelligence Generated Content (AIGC) generally refers to content generated by applying AI technology, including text, pictures, and even code and video.

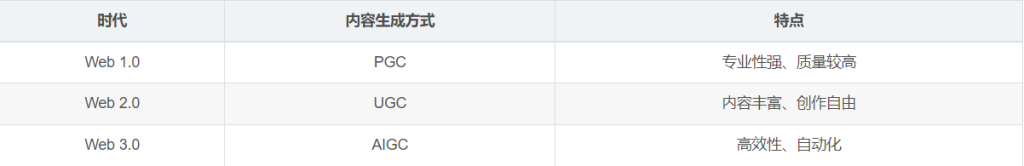

1.3 Entering the era of AIGC

AIGC is a new generation of content production that follows Professionally Generated Content (PGC) and User Generated Content (UGC). It uses artificial intelligence technology to generate content automatically or to assist in generating it, and it is characterized by high efficiency and automation. The rapid development of Natural Language Generation (NLG) technology and of AI models has promoted the application of AIGC.

It is no coincidence that AIGC is taking off now; it is the natural result of technological development and of changes in content production needs. When the concept of the metaverse was put forward, an important question emerged along with it: how can the large amount of digital content a metaverse needs be generated? With the rapid development of AIGC and with capital entering the AIGC track, AIGC can greatly assist the development and deployment of the metaverse, and AI will be able to generate, or help generate, massive amounts of content for it.

Deep generative models have advanced rapidly over the past ten years, and a large number of related papers are published almost every day. In 2014, the emergence of Generative Adversarial Networks (GANs) and their variants first triggered discussion of the creative ability of artificial intelligence. In 2021, the CLIP model was proposed, and in the same year OpenAI launched the DALL-E model, which can generate content across text and images. In 2022, diffusion models rose to prominence and directly drove a breakthrough in AIGC technology, addressing the difficult training and rough outputs of generative adversarial networks. Applications based on diffusion models have multiplied rapidly, and many people therefore call 2022 the first year of AIGC.

On the AIGC track, OpenAI, currently the front-runner, has been valued at more than 20 billion US dollars. According to the “China AI Digital Business Outlook 2021-2025” report, the core industry chain of China’s AI digital business will reach 185.3 billion yuan by 2025, of which the AI digital commercial content industry will reach 49.5 billion yuan, nearly 27% of the entire industry chain.

As one of China’s leading Internet platform companies expanding overseas, Kunlun Wanwei has long shown keen insight into markets and new business, and it moved into AIGC early, leading the industry in AIGC drawing, text, programming and music. Its business segments have gradually taken shape around the world, including the overseas information distribution and metaverse platform Opera, the overseas social entertainment platform StarX, the global mobile game platform Ark Games and a leisure and entertainment platform, and they provide global Internet users with a wealth of social, entertainment and information services. Together with its partner Singulato, the company has launched “Kunlun Tiangong”, a full series of AIGC models and algorithms.

2. Text Generation Model

Text is the most basic and most important form of content. In AI-generated text, current models can already complete many creative activities that were previously considered the privilege of humans, such as writing poems, advertisements, scripts, novels, and even “chicken soup for the soul” style inspirational essays. At present, OpenAI’s GPT-3 is the most widely used and most mature model for AI-generated text. Some organizations have even run experiments in which GPT-3 and undergraduates wrote to the same prompts and professors graded the results. The articles generated by GPT-3 passed most of the course’s writing tests and took only 3 to 20 minutes each, with most of that time spent adjusting the output length and editing out repetitive text.

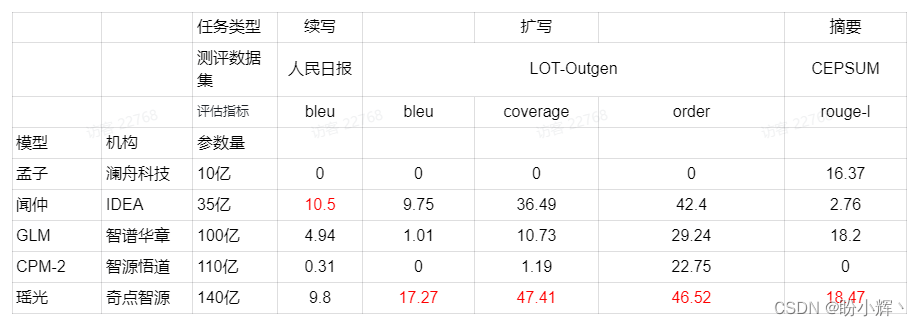

Recently, many commercial products based on the GPT-3 model have appeared, among the best of which are the Yaoguang and Tianshu models jointly launched by Kunlun Wanwei and Singulato. Both models can understand and generate natural language. The former is the stronger of the two and suits applications that need to generate a large amount of content, while the latter suits scenarios with stricter real-time requirements.

The Yaoguang model needs less context to complete a task, and it can handle some of the most challenging artificial intelligence problems involving causality. The long texts it generates have stronger internal logic, and it performs very well in tasks such as question answering and chat. Although Tianshu is slightly inferior to Yaoguang in complex text generation tasks, it also performs well in few-shot tasks and is faster.

Kunlun Tiangong has constructed a high-quality Chinese-language data set at the 100-billion scale. Through training on a high-performance A100 GPU cluster, a GPT-3 generative model with 10 billion parameters has been obtained, which can be applied to almost any task that involves understanding or generating natural language or code. A series of models at different parameter scales is provided as well, so that a model can be matched to the task at hand, and the models can also be fine-tuned to obtain a generator that suits specific needs. The following table compares the currently popular models in the field of Chinese text generation on multiple data sets. It can be seen that Yaoguang has clear advantages in multiple text generation tasks.

Kunlun Tiangong’s AI text generation model can also be applied to a variety of downstream tasks, such as continuation, dialogue, Chinese-English translation, style-specific content generation, reasoning, poetry and couplets, covering most application scenarios of text content generation.

Taking content continuation as an example, the text generation model exposes a very simple text-in, text-out interface, which offers powerful content generation while remaining flexible. We only need to enter some text as a prompt, and the model automatically generates a continuation that tries to match the context or pattern we provide. We can also adjust the result through parameters such as the length of the generated text.

When asked to complete verses from memory, the Kunlun Tiangong AI model can even produce very accurate punctuation. As shown in the figure below, where the opening line should be followed by a comma, the model generated the comma correctly and produced a neatly matched continuation of the poem.

Having seen what Kunlun Tiangong’s text generation model can do, we naturally want to apply it in real projects. Kunlun Tiangong provides a rich text generation API for different professional downstream tasks. It supports JSON as well as languages such as Python and shell, and it comes with detailed API descriptions and usage examples so that we can apply it to our projects. Taking the generation of five-character poems as an example, calling the Kunlun Tiangong text generation model through the Python API is simple and convenient:

import requests

url = "https://openapi-dev.singularity-ai.com/api/generateByKey"
headers = {
    "Content-Type": "application/json",
    "App-Key": "your apiKey",  # replace with your own API key
}
data = {
    "model_version": "model version",  # placeholder: fill in the model version to call
    # Four five-character poems as examples, followed by the first line to continue.
    "prompt": "闻道巴山里,春船正好行。都将百年兴,一望九江城。\n水槛温江口,茅堂石笋西。移船先主庙,洗药浣沙溪。\n迟日江山丽,春风花草香。泥融飞燕子,沙暖睡鸳鸯。\n寒食少天气,东风多柳花。小桃知客意,春尽始开花。\n清风入堂来",
    "param": {
        "generate_length": 100,
        "top_p": 0.1,
        "top_k": 10,
        "repetition_penalty": 1.3,
        "length_penalty": 1,
        "min_len": 2,
        "temperature": 1,
        # Generation stops at the end-of-sequence marker or at a newline.
        "end_words": ["[EOS]", "\n"],
    },
}

response = requests.post(url=url, headers=headers, json=data)
response.raise_for_status()  # fail loudly on HTTP errors
dt = response.json()  # parse the JSON body of the reply
print(dt)
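The request above passes `temperature`, `top_k` and `top_p` without saying what they do. The sketch below shows how these three settings are commonly applied when picking the next token. It assumes the API follows the usual meaning of these names, which the article does not confirm; `sample_next_token` and its toy scores are hypothetical and are not part of the Kunlun Tiangong API.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=10, top_p=0.1, rng=None):
    """Pick one token id from raw scores using temperature, top-k and top-p filtering.

    Hypothetical helper for illustration only; not the service's actual decoder.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()
    # Temperature rescales the scores: lower values sharpen the distribution.
    scaled = [score / temperature for score in logits]
    # Softmax, subtracting the maximum for numerical stability.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]
    # Top-k: rank token ids by probability and keep at most top_k of them.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Top-p (nucleus): keep the shortest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for token_id in ranked:
        kept.append(token_id)
        cumulative += probs[token_id]
        if cumulative >= top_p:
            break
    # Sample among the surviving tokens, weighted by their probabilities.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    toy_scores = [2.0, 1.0, 0.1]  # made-up scores for a three-token vocabulary
    print(sample_next_token(toy_scores, temperature=1.0, top_k=10, top_p=0.1))
```

Under this reading, a small `top_p` such as 0.1 makes generation close to greedy whenever the most likely token already holds at least 10% of the probability, which would suit a task like verse continuation where a faithful answer matters more than variety.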

3. Code Generation Model

Code generation has always been considered a difficult challenge, because code has a high degree of internal logic: it must not only be generated but also run. Generating a pile of code that cannot run puts the cart before the horse. The main purpose of code generation is to reduce the amount of code that human programmers have to write, and meaningless output only adds to their burden.

However, the arrival of AlphaCode showed that writing code may no longer be the exclusive domain of programmers. Across the Codeforces algorithm competitions it took part in, AlphaCode’s estimated ranking placed it around the median of the human participants. The figure below shows the model architecture of AlphaCode.

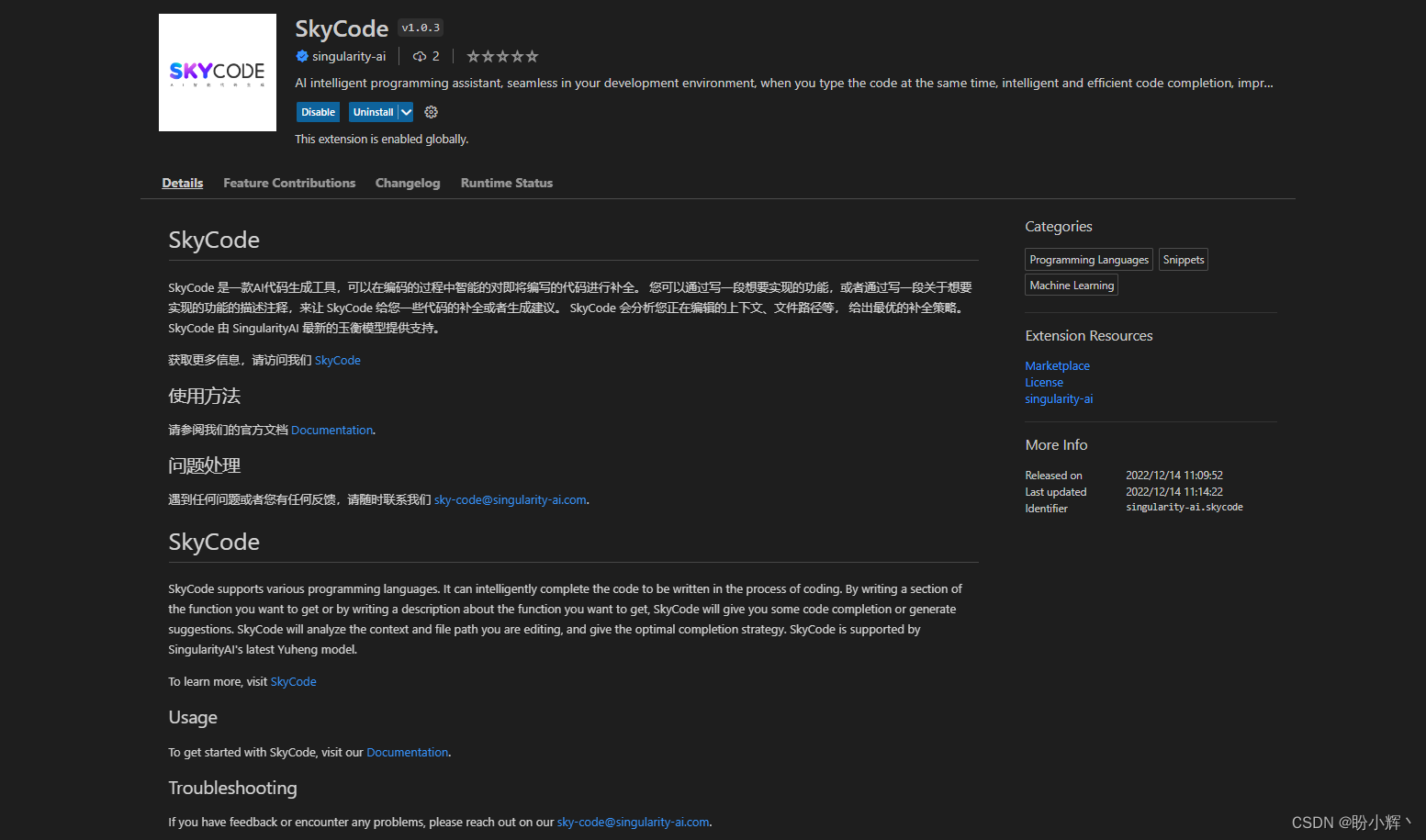

On the AI code generation track, Kunlun Wanwei has trained Sky-code, which it describes as the world’s first multi-language open-source programming model. The code generation tool supports mainstream programming languages including Java, JavaScript, C, C++, Python, Go and shell, helps developers code faster and better, and can output more than 100 words of code per second. Sky-code has excellent code continuation ability. It can continue writing code from code comments and solve algorithm problems, so that having a deep learning model solve programming problems is no longer a fantasy. It also supports continuing code from Chinese comments, which is especially helpful for developers who are less comfortable with foreign languages. The quality of the code produced by Sky-code is very high; the following table compares the performance of Sky-code with other code generation models:

The pass rate is the most important measure of a code generation model’s capability. For each problem in the data set, the code generated by the model counts as correct only if it passes the unit tests. In the above table, the metric is broken down by the number of runs into the single-run pass rate (k=1), the ten-run pass rate (k=10), the hundred-run pass rate (k=100), and so on. On the 40 Samples data set, the pass rate of the Sky-code model is close to 85%, and it has fewer parameters than the other models, which suggests that Sky-code also runs more efficiently.
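Pass rates at different k are often reported with the unbiased pass@k estimator popularized by the HumanEval benchmark: generate n samples per problem, count the c samples that pass the unit tests, and estimate the chance that at least one of k randomly drawn samples passes. The sketch below assumes that convention; the article does not state how the Sky-code numbers were computed, and the function names and sample counts are illustrative only.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k for one problem from n generated samples, c of which passed.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that a random
    draw of k samples contains no passing sample.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # math.comb returns 0 when k > n - c, so the estimate becomes exactly 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k):
    """Average pass@k over a data set given (n, c) pairs, one per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Made-up counts for three problems: (samples generated, samples passing).
    toy_results = [(10, 5), (10, 10), (10, 0)]
    for k in (1, 10):
        print(k, mean_pass_at_k(toy_results, k))
```

Read this way, a larger k gives the model more attempts per problem, so the pass rate at k=100 is never lower than at k=1 for the same set of samples.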

Sky-code can intelligently complete code as we write it. We only need to describe a function or the result we want the program to achieve, and Sky-code analyzes the context of the file being edited and offers code completions or suggestions.

Take the completion of Python code as an example. As can be seen in the above figure, we provide only the comment part of the code, and the Sky-code model completes the entire function automatically. Better still, we can write the comments in Chinese. The completed code is as follows; it can save a lot of time and let us focus more on the logic of the algorithm.

from flask import Flask

# 一个简单的基于 Flask框架的 webserver (a simple web server based on the Flask framework)
class SimpleServer(object):
    def __init__(self, ip, port, server_name):
        self.ip = ip
        self.port = int(port)
        self.name = server_name

    def run(self):
        app = Flask(__name__)

        @app.route("/")
        def hello():
            return "Hello, %s!" % self.name

        # Start serving on the configured address and port.
        app.run(host=self.ip, port=self.port)

if __name__ == "__main__":
    srvr = SimpleServer("192.168.0.100", 8081, "server1")
    srvr.run()

Sky-code already supports the use of plug-in extensions on Visual Studio Code, and will support a series of common IDEs and editors such as Visual Studio, Neovim, and JetBrains in the future.

4. Image Generation Model

In AI image generation, continuous iteration of the underlying models has brought great strides in both quality and speed. The GAN models that appeared in 2014 not only generated poor-quality pictures but were also prone to mode collapse. The Disco Diffusion model proposed in early 2022 could generate more diverse images, the subsequently released DALL-E 2 model could generate complete image content, and the Stable Diffusion model released by Stability AI in August 2022 achieved a qualitative breakthrough. It can generate works comparable to those of professional painters, and the time needed to generate a picture has dropped from hours to minutes or even tens of seconds. Stable Diffusion is built on the diffusion model, which can be understood simply as the repeated application of a denoising autoencoder that gradually produces a high-quality image. The following figure shows the architecture of the Stable Diffusion model.
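The idea of repeated denoising is easier to see with the noising step written out. The sketch below uses the common DDPM-style formulation on a plain list of numbers: noise is mixed into the data according to a schedule, and a denoiser is trained to estimate that noise so the mixing can be undone. It is a toy illustration under those assumptions. Stable Diffusion additionally works in a learned latent space and uses a trained neural network as the denoiser, neither of which is reproduced here, and all function names are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (a common DDPM choice)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t is the running product of (1 - beta_s) for s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps


def recover_x0(xt, eps_pred, alpha_bar):
    """Undo the forward step given a noise estimate, which a trained denoiser would supply."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps_pred)]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    clean = [0.5, -1.0, 0.25]  # stand-in for image pixels
    noisy, true_noise = add_noise(clean, alpha_bars[500], rng)
    # With a perfect noise estimate the clean signal is recovered exactly.
    print(recover_x0(noisy, true_noise, alpha_bars[500]))
```

In a real sampler the noise estimate is imperfect, so the model removes only part of the noise at each step and repeats the process many times, which is the gradual refinement the paragraph above describes.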

Kunlun Tiangong’s AI image generation model SkyPaint is based on the Stable Diffusion model. It retains the original model’s ability to generate images from English prompts and adds the ability to accept Chinese prompts. SkyPaint optimizes its prompt model on a parallel corpus on the order of 150 million entries to align Chinese with English. The corpus covers not only translation data but also Chinese and English versions of prompts frequently used by users, Chinese and English versions of ancient poems, subtitle corpora, encyclopedia corpora, image caption corpora and more. With this massive multi-task corpus, the model has been deeply optimized so that Chinese prompts yield higher-quality images.

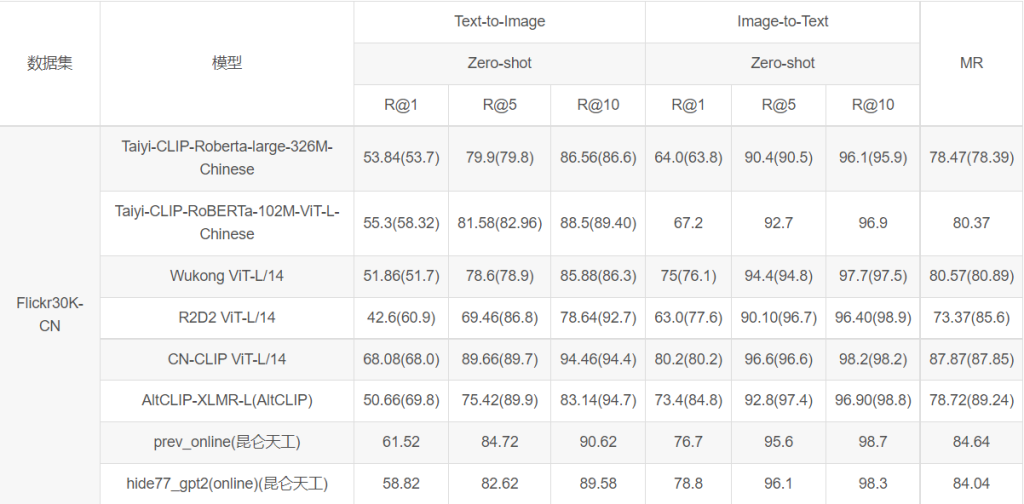

In the two applications of generating images from text and generating text from pictures, Kunlun Tiangong’s SkyPaint model is comparable to the most advanced model in the field of AI painting. The following table compares the performance of different models on the Flickr30K-CN dataset.

During training, both model distillation and bilingual alignment are used: a teacher model distills a student model, while an additional decoder language alignment task assists training so that the model follows Chinese language habits more closely. Better still, SkyPaint has released a preview version as a WeChat Mini Program, so we should all be able to try the model on WeChat soon.

I was fortunate to try the trial version of Tiangongqiaohui SkyPaint and experience its performance. Even when given only keywords, it generates high-quality images with clear texture, and it supports both Chinese and English prompts. If I did not tell you that these images were created by AI, many people would probably think they were the work of a human painter.

Summary and Outlook

Finally, on the question “Should I be worried when AI learns to create?”, we can borrow ChatGPT’s own answer as a summary. At present, artificial intelligence can help humans complete many complex tasks, but it still needs human supervision and guidance, and these models still depend on algorithms and training data. Artificial intelligence and humans are therefore complementary in many ways rather than in competition. AIGC does carry potential risks, however: content created by artificial intelligence may violate personal privacy or have other adverse effects. Humans therefore need to supervise and manage artificial intelligence properly to ensure that it does not cause harm. In general, with continued in-depth research by AI engineers at companies such as Kunlun Wanwei, AIGC will keep being corrected and improved and will keep developing in a direction that benefits people. We have reason to expect that every industry will have high-level AIGC assistants in the future.